CES 2026: What We Saw, What’s Shifting, and Where Immersive Experiences Are Headed

CES 2026 marked a clear shift:

- The most important conversations moved from what’s possible to what can realistically scale across devices, workflows, and real product roadmaps

- Immersive experiences are increasingly being evaluated as platforms integrated into products and workflows, not as standalone 3D display components, and that is also how Leia Inc. is now being engaged

- The next phase is about interaction and scale, not entirely new ecosystems

This article breaks down what that shift looked like on the show floor and why it matters as the industry heads toward MWC.

From Possibility to Deployment

One of the most noticeable changes at CES was the maturity of the conversation. The question is no longer whether immersive or spatial experiences are possible. That phase has largely passed. The focus now is on deployment, efficiency, and compatibility with what already exists.

This shift was echoed across the show floor in a growing emphasis on hybrid intelligence — combining on-device processing with cloud-based systems. Rather than positioning edge AI as a replacement for the cloud, many announcements reflected a balance between real-time, on-device responsiveness and cloud-scale intelligence operating in the background. That balance matters for immersive experiences, where latency, immediacy, and spatial responsiveness must feel natural in the moment.

Device makers are under pressure to deliver meaningful differentiation without increasing cost, power consumption, or development complexity. They are looking for solutions that align with existing hardware roadmaps, integrate into established software workflows, and can scale across millions, or even billions, of devices.

At the same time, the appetite for entirely new platforms or disconnected experiences is shrinking. Across the industry, several high-profile players have begun pulling back from standalone immersive strategies that require dedicated hardware or isolated ecosystems. The momentum is clearly shifting toward approaches that enhance familiar devices and content rather than asking users to migrate to entirely new environments.

What stood out was a shared understanding that the next phase of immersive experiences will not come from building parallel ecosystems. Progress will come from unlocking more value from the devices people already use every day — including phones, tablets, laptops, and displays — by making them more spatially aware and expressive.

Immersive Experiences in Practice

That shift toward practicality was reflected directly in the conversations we had in our CES suite. The strongest reactions did not come from abstract concepts, but from familiar tools and real workflows shown in a new context. Seeing immersive capability applied to software and platforms people already know helped move the conversation from possibility to relevance.

In content creation, demos built around established tools like Blender and DaVinci Resolve resonated strongly. Being able to review depth, motion, and spatial composition during the creative process, rather than only after export, sparked meaningful discussions with creators and platform teams. The value was not framed as visual spectacle, but as better judgment earlier in the workflow, when creative decisions still matter most.

Gaming also emerged as one of the clearest, most visible signals on the CES show floor. Across booths and demos, high-performance displays, wider fields of view, and increased visual realism were front and center, particularly in experiences showcased by companies like Samsung. What stood out was how depth, motion, and responsiveness combined to create a stronger sense of presence without changing how games are built or played. These demonstrations reinforced a broader CES trend: immersion is becoming a competitive advantage for gaming platforms, driven by display capability rather than entirely new content ecosystems.

Experiential content and interaction were another area where immersive capability felt especially tangible. In our CES suite, demos with Greg Fodor and Portal VR showed how full VR experiences can be played on an Immersity display using standard VR controllers, without requiring a headset. By bringing the SteamVR ecosystem to an immersive display, users were able to move, interact, and engage naturally while remaining present in their surroundings.

Importantly, this approach unlocked immediate access to thousands of existing games and applications. Rather than requiring new content or new controls, immersive interaction was extended to a broad library of existing experiences simply by rethinking how and where those experiences are delivered. The response reflected growing interest in flexible immersive experiences that preserve familiar content and controls while expanding where immersive interaction can take place.

Communication and collaboration use cases generated similar momentum. Immersive displays can convey presence, spatial context, and nuance in ways traditional video often struggles to capture, especially in conversations where visual depth, eye contact, and shared context matter.

These experiences were positioned not as replacements for existing tools, but as natural extensions of how people already connect and collaborate. With Immersity, immersive video conferencing can be embedded into familiar platforms such as Zoom and Microsoft Teams, enhancing meetings without requiring new software, workflows, or user behavior.

The result is a more expressive and human form of communication that fits naturally into existing enterprise environments. Rather than asking teams to adopt new tools, immersive capability augments the ones they already rely on, making collaboration feel more present and intuitive while remaining scalable across devices and organizations.

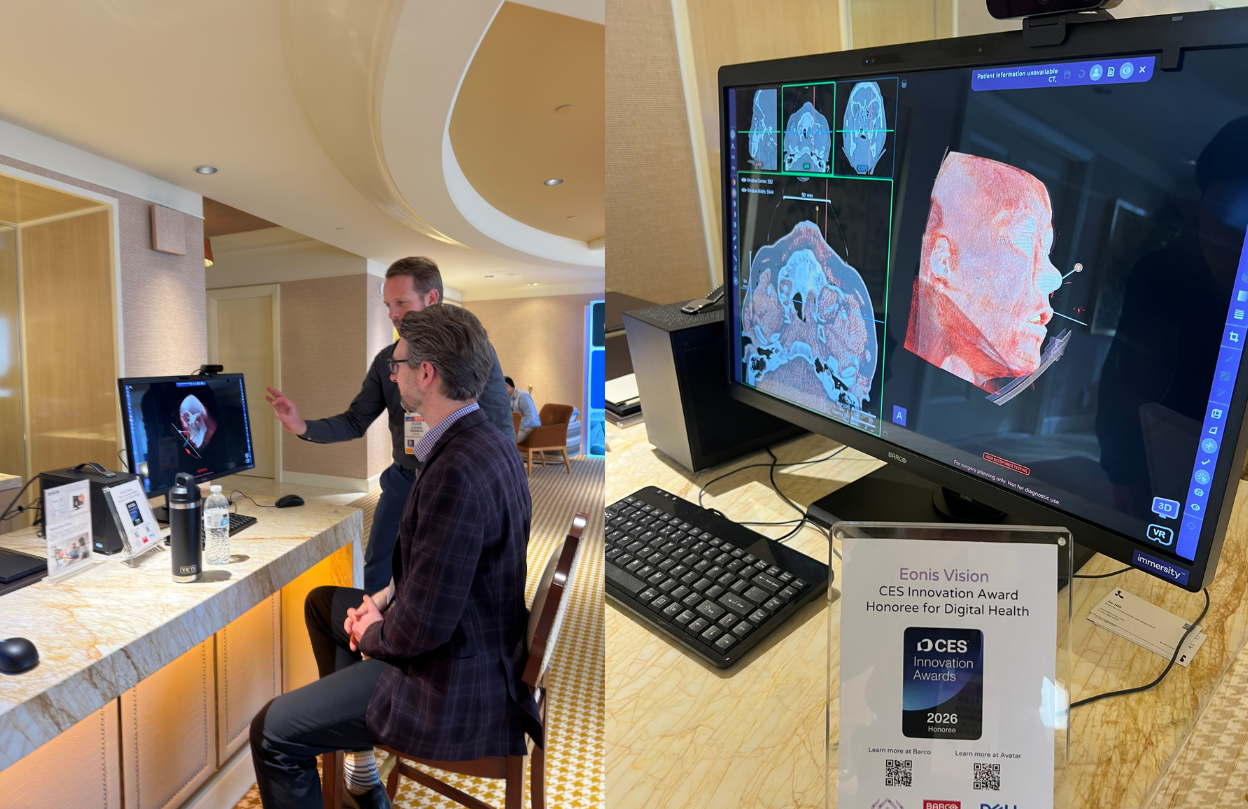

In professional and medical contexts, demos involving partners like Barco and Avatar Medical showed how spatial visualization can improve understanding, not just presentation. At CES, their Eonis Vision solution was recognized as a CES Innovation Awards® 2026 Honoree in the Digital Healthcare category, highlighting how immersive displays can transform complex imaging into intuitive, depth-rich visualizations that both clinicians and patients can interpret together. Whether reviewing anatomy in lifelike 3D or visualizing depth-critical information that flat screens often struggle to convey, immersive displays helped shift conversations toward clarity and confidence rather than complexity or novelty.

Immersive video experiences also drew attention, particularly when paired with recognizable environments and content types. Enhancing live performance footage, cinematic content, and media playback with depth and motion, without requiring new pipelines or formats, helped reframe immersion as something additive. Seeing this on familiar devices made it clear that immersive experiences can evolve naturally alongside existing media consumption habits.

Across all these demos, the common thread was familiarity. The experiences felt intuitive because they aligned with existing software, workflows, and user expectations. That familiarity is what makes immersive capability viable at scale, and why these use cases resonated so strongly throughout the week.

What Comes Next

Looking ahead, several trajectories feel increasingly clear. Established creative and productivity tools will continue to evolve, supporting spatial interaction without forcing users to relearn familiar workflows. OEMs will drive adoption by embedding immersive capability directly into product lines rather than positioning it as an add-on. The most impactful immersive experiences will not feel futuristic. They will feel natural because they integrate seamlessly into everyday use.

The takeaway from CES 2026 is not that immersive experiences are coming someday. The shift toward immersive content experiences is already underway.

CES served as a reminder that meaningful change does not always arrive with spectacle. It often arrives through alignment between hardware and software, between creators and platforms, and between ambition and execution.

At Immersity, that focus remains central. We are working to turn today’s screens into more capable, spatially aware interfaces that support how people create, communicate, and experience content.

The conversations from CES were just the beginning. We look forward to continuing them in Barcelona this March at MWC.
